Launch Test Notebooks from Azure Pipelines

Architecture

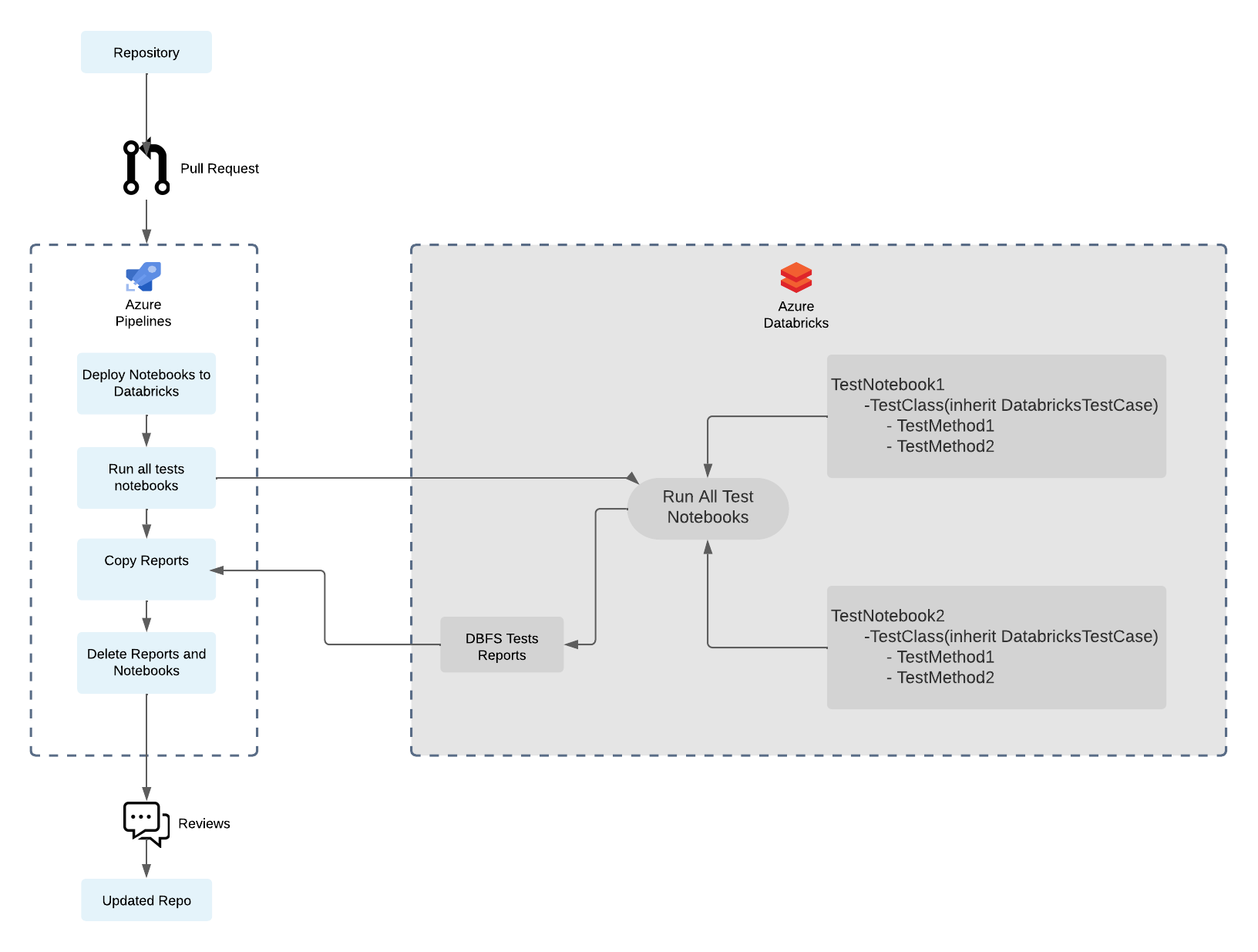

The following workflow (source: Unit Tests on Databricks) tests notebooks from Azure Pipelines.

In this architecture, notebooks are saved as .py files in an Azure DevOps repository and are deployed to Databricks as notebooks.

The Azure pipeline then uses Databricks Testing Tools to run all the test notebooks on a Databricks cluster.

Finally, the Azure pipeline uses the Databricks API to transfer the test reports from Databricks to Azure DevOps and to clean up the temporary environment created to run the tests.

Launch tests: The Template

To launch test notebooks automatically from Azure Pipelines, we provide a pipeline template that you can reference in your own Azure pipeline.

The template, template-run-tests-notebooks.yml, is available in the PR.Data.DataFoundation.DatabricksTestingTools repository.
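Without a `ref`, a repository resource follows the branch it points to, so template changes reach your pipeline as soon as they are merged there. If you prefer to choose when you pick up a new version of the template, Azure Pipelines lets you pin a repository resource to a branch or tag with the `ref` property. A minimal sketch, assuming a tag named `v0.1.2` exists in the template repository (the tag name is hypothetical):

```yaml
resources:
  repositories:
    - repository: templates # alias used after @ when referencing the template
      type: git
      name: PR.Data.DataFoundation/PR.Data.DataFoundation.DatabricksTestingTools
      ref: refs/tags/v0.1.2 # hypothetical tag: replace with a tag or branch that exists
```

Pinning the template is independent of the databricksTestingToolsVersion parameter, which only controls the version of the Python package installed by the template.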

What the template does

The template launches the test notebooks in the following steps:

- Install pr-databricks-testing-tools

- Deploy the current Azure DevOps repository to Databricks Repos

- Launch the test notebooks with the Databricks Testing Tools CLI and write the test results to DBFS

- Get the test results and coverage reports from DBFS, then delete the temporary Databricks repo and DBFS folder

- Publish the test results to Azure Pipelines, which provides test reporting and analytics in the Azure DevOps UI

How to use the template

The template expects the following parameters:

- databricksHost: required, the workspace URL, starting with https://

- databricksToken: required, the Databricks personal access token used for authentication

- databricksClusterId: required, the ID of the cluster on which the tests are run

- localTestsDirectory: required, the path of the directory containing the test notebooks, relative to the root of the Azure DevOps repository

- databricksRepoPath: optional, the Databricks Repos folder under which the repository is temporarily cloned. Defaults to /Repos/PR.Data.Training

- databricksTestingToolsVersion: optional, the version of the Databricks Testing Tools package to install. If not specified, the latest version is used

Here is how to use the template in your pipeline:

```yaml
resources:
  repositories:
    - repository: templates # name to reference the DatabricksTestingTools repository
      type: git
      name: PR.Data.DataFoundation/PR.Data.DataFoundation.DatabricksTestingTools

pool:
  vmImage: ubuntu-latest

steps:
  - template: template-run-tests-notebooks.yml@templates
    parameters:
      databricksHost: $(databricks_host) # REQUIRED: the workspace url starting with https://
      databricksToken: $(databricks_token) # REQUIRED: the databricks personal access token for authentication
      databricksClusterId: $(databricks_cluster_id) # REQUIRED: the id of the cluster on which the tests will be launched
      localTestsDirectory: tests # REQUIRED: the path directory of the test notebooks
      databricksTestingToolsVersion: 0.1.2 # OPTIONAL: the version of databricks testing tools, if this parameter is not set the latest version is used
```

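Make sure the databricks_host, databricks_token and databricks_cluster_id variables are defined before running the pipeline. Because databricks_token is a credential, store it as a secret variable.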
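One way to provide these variables, sketched under assumptions: the non-sensitive values are declared inline in the pipeline, while databricks_token comes from a variable group in which it is marked as secret. The group name `databricks-tests`, the workspace URL and the cluster ID below are placeholders, not real values.

```yaml
variables:
  # Hypothetical variable group (Pipelines > Library) holding databricks_token as a secret
  - group: databricks-tests
  - name: databricks_host
    value: https://adb-0000000000000000.0.azuredatabricks.net # placeholder workspace URL
  - name: databricks_cluster_id
    value: 0000-000000-abcdefgh # placeholder cluster ID
```

The `variables` block sits at the top level of the pipeline, next to `resources`, `pool` and `steps`, and the values are then available through the `$(databricks_host)`, `$(databricks_token)` and `$(databricks_cluster_id)` macros used in the example.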

Full YAML template

```yaml
# ***************
# Steps template to include in a pipeline in order to launch test notebooks with Databricks Testing Tools
# ***************

parameters:
  - name: databricksHost # REQUIRED: the workspace url starting with https://
    type: string
  - name: databricksToken # REQUIRED: the databricks personal access token for authentication
    type: string
  - name: databricksClusterId # REQUIRED: the id of the cluster on which the tests will be launched
    type: string
  - name: databricksRepoPath # OPTIONAL: the databricks repo directory where the notebooks will be deployed
    type: string
    default: /Repos/PR.Data.Training
  - name: localTestsDirectory # REQUIRED: the path directory of the test notebooks
    type: string
  - name: databricksTestingToolsVersion # OPTIONAL: the databricks testing tools version
    type: string
    default: ''

steps:
  - task: UsePythonVersion@0
    inputs:
      versionSpec: '3.8'
    displayName: 'Use Python 3.8'

  - task: PipAuthenticate@1
    inputs:
      artifactFeeds: 'pernod-ricard-python-data'
      onlyAddExtraIndex: true

  - script: |
      pip install artifacts-keyring
      version="${{ parameters.databricksTestingToolsVersion }}"
      # No version given: install the latest published version
      if [[ -z "$version" ]]
      then
        pip install pr-databricks-testing-tools --extra-index-url https://pkgs.dev.azure.com/pernod-ricard-data/PR.Data.DataFoundation/_packaging/pernod-ricard-python-data/pypi/simple
      else
        pip install "pr-databricks-testing-tools==$version" --extra-index-url https://pkgs.dev.azure.com/pernod-ricard-data/PR.Data.DataFoundation/_packaging/pernod-ricard-python-data/pypi/simple
      fi
    displayName: 'Install databricks testing tools'
    env:
      ARTIFACTS_KEYRING_NONINTERACTIVE_MODE: true

  - script: |
      echo ${{ parameters.databricksToken }} > token.txt
      databricks configure --host ${{ parameters.databricksHost }} --token-file token.txt
    displayName: 'Configure databricks cli'

  - script: |
      # Clone the Azure DevOps repository into a temporary Databricks repo named after the build
      databricks repos create --url $(Build.Repository.URI) --provider azureDevOpsServices --path ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
      if [[ "$(Build.SourceBranch)" == refs/heads/* ]]
      then
        # Branch build: check out the branch that triggered the build (strip the refs/heads/ prefix)
        databricks repos update --path ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId) --branch $(echo "$(Build.SourceBranch)" | sed "s/refs\/heads\///")
      else
        # Pull request build: check out the PR target branch (strip the refs/heads/ prefix)
        databricks repos update --path ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId) --branch $(echo "$(System.PullRequest.TargetBranch)" | sed "s/refs\/heads\///")
      fi
    displayName: 'Deploy to Databricks Repos'

  - script: |
      databricks_testing_tools --tests-dir ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)/${{ parameters.localTestsDirectory }} --cluster-id ${{ parameters.databricksClusterId }} --output-dir /dbfs${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
    displayName: 'Execute all notebook tests'
    env:
      DATABRICKS_HOST: ${{ parameters.databricksHost }}
      DATABRICKS_TOKEN: ${{ parameters.databricksToken }}

  - script: |
      echo dbfs:${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
      # Copy the reports to the agent, then remove the temporary DBFS folder and Databricks repo
      databricks fs cp dbfs:${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)/ $(System.DefaultWorkingDirectory)/result -r
      databricks fs rm -r dbfs:${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
      databricks repos delete --path ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
    displayName: 'Get test results and clean environment'

  - task: PublishTestResults@2
    inputs:
      testResultsFormat: 'JUnit'
      testResultsFiles: '**/TEST-*.xml'
      searchFolder: '$(System.DefaultWorkingDirectory)/result/'
      mergeTestResults: true
      failTaskOnFailedTests: true
```
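In the template as written, each step runs only if all previous steps succeeded, which is the Azure Pipelines default. If an earlier step fails (for example the deployment or the test run), the temporary Databricks repo and DBFS folder are left behind and no results are published. A possible variation, which is not part of the published template, is to add step conditions to the last two steps: `always()` for the clean-up step and `succeededOrFailed()` for publishing. A sketch of those two steps:

```yaml
  # Hypothetical variation of the last two steps of the template
  - script: |
      databricks fs cp dbfs:${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)/ $(System.DefaultWorkingDirectory)/result -r
      databricks fs rm -r dbfs:${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
      databricks repos delete --path ${{ parameters.databricksRepoPath }}/Test_$(Build.BuildId)
    displayName: 'Get test results and clean environment'
    condition: always() # run even if an earlier step failed or the run was cancelled

  - task: PublishTestResults@2
    condition: succeededOrFailed() # publish whatever results exist, even if an earlier step failed
    inputs:
      testResultsFormat: 'JUnit'
      testResultsFiles: '**/TEST-*.xml'
      searchFolder: '$(System.DefaultWorkingDirectory)/result/'
      mergeTestResults: true
      failTaskOnFailedTests: true
```

`always()` also runs the clean-up when the run is cancelled, which is usually what you want for a temporary environment; `succeededOrFailed()` skips publishing on cancellation.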